Why Data Center Cooling is Critical to Modern Infrastructure

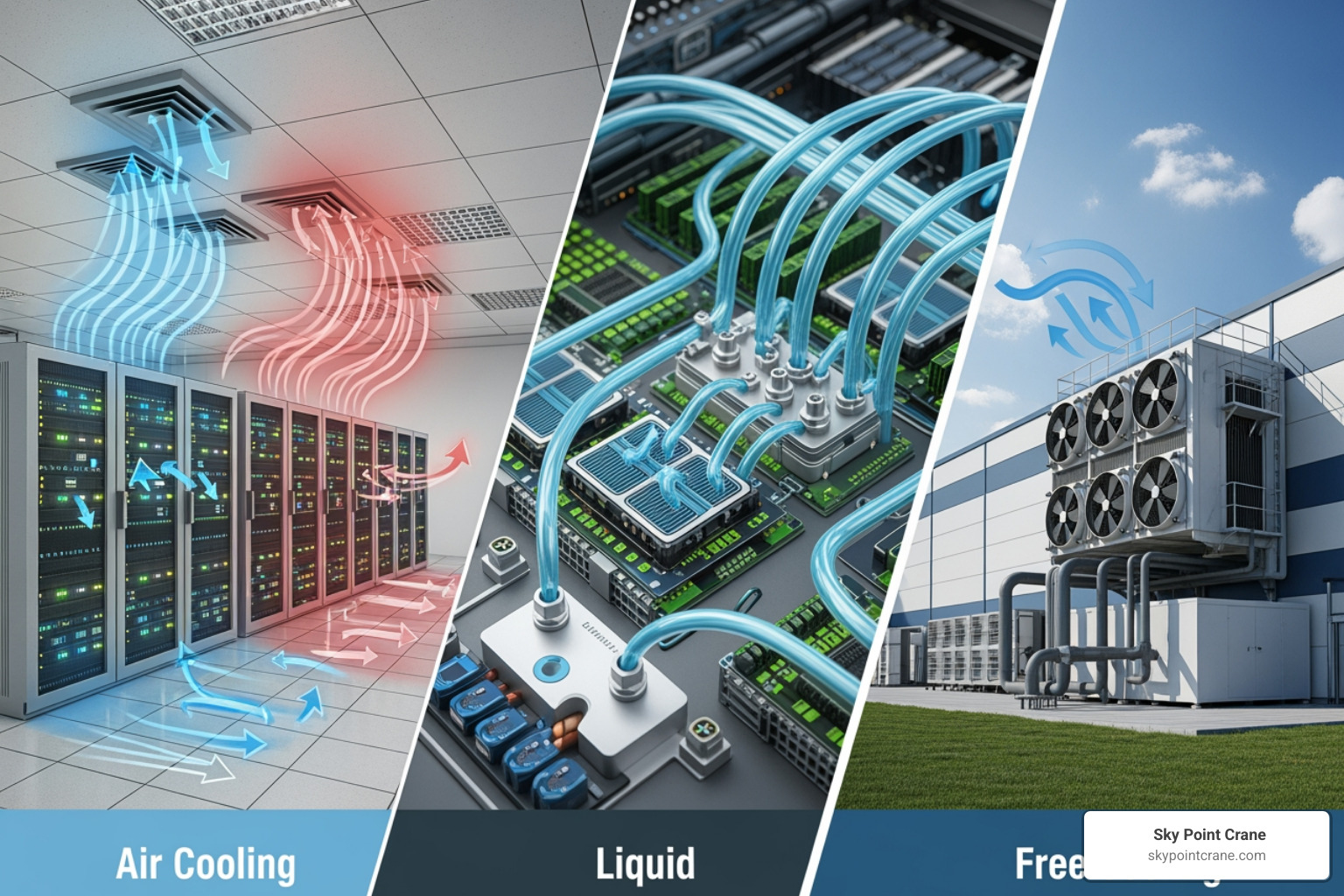

Data center cooling is the process of removing heat generated by IT equipment to maintain safe operating temperatures and ensure continuous uptime. The primary methods include:

- Air Cooling: Traditional CRAC/CRAH units with hot/cold aisle containment (PUE: 1.4-1.5)

- Liquid Cooling: Direct-to-chip or immersion systems for high-density environments (PUE: 1.1-1.2)

- Free Cooling: Using outside air or water when temperatures permit (10-13% energy savings)

- Hybrid Systems: Combining multiple approaches based on rack density and climate

Key optimization strategies: Implement hot/cold aisle containment, optimize temperature setpoints to 70-75°F, deploy economizers, and consider liquid cooling for high-density racks.

The numbers tell a stark story. Cooling systems account for approximately 40% of energy consumption in data centers. As artificial intelligence and big data processing demands explode, processor thermal design power (TDP) is climbing past 280 watts, the threshold where traditional air cooling struggles. By 2030, data centers are projected to consume between 3% and 13% of global electricity, a massive increase from just 1% in 2010.

This is a critical business problem. Every degree of overcooling wastes money, every inefficient design raises your Power Usage Effectiveness (PUE) and operational costs, and every hour of downtime due to thermal issues can cost thousands or even millions of dollars. The challenge is balancing 100% uptime with cost management and sustainability targets, all while planning for tomorrow’s higher-density equipment.

The good news is that cooling technology has evolved dramatically. Liquid cooling systems are achieving PUEs as low as 1.1, free cooling can slash energy use, and AI-powered systems are optimizing cooling in real-time. This guide breaks down everything you need to know about modern data center cooling—from the fundamental heat problem to comparing technologies and implementing optimization strategies.

I’m Dave Brocious, and I’ve spent over 30 years solving complex infrastructure challenges, including the specialized rigging and crane services required for installing data center cooling equipment safely and efficiently. My work with Sky Point Crane has given me a front-row seat to how proper infrastructure planning directly impacts project success and long-term operational efficiency.

Let’s explore how you can build and maintain the coolest, most efficient data center possible.

The Heat Problem: Why Modern Data Centers Need Advanced Cooling

Our digital world runs on data centers, but all this computational power generates a significant byproduct: immense heat. If not properly managed, this heat can lead to equipment failure, reduced performance, and costly downtime. In some data centers, more than 50% of the total power consumption goes directly into cooling the IT equipment, making effective data center cooling a core component of any efficient operation.

The challenges are mounting. We’re seeing rising rack densities, where more powerful equipment is packed into smaller footprints. Data centers are projected to use 3-13% of global electricity by 2030, a significant jump from 1% in 2010. This brings increased environmental and regulatory pressures to improve sustainability, alongside growing concerns about water usage, as many traditional cooling methods are water-intensive.

Understanding Today’s Data Center Cooling Challenges

The primary challenge in data center cooling is balancing 100% continuous uptime with aggressive energy efficiency goals. This is complicated by the increasing thermal design power (TDP) of modern processors. High-performance computing (HPC) and AI are pushing processor TDPs well beyond 280 W, a threshold where traditional air cooling struggles, and are expected to surpass 700 W by 2025. Yesterday’s cooling solutions simply won’t work for tomorrow’s demands.

Running cooling systems 24/7 is expensive, and inefficient systems inflate operational expenses. Furthermore, in many regions, including parts of our service areas in Western and Central Pennsylvania, Ohio, West Virginia, and Maryland, water scarcity is a growing concern. Traditional evaporative cooling towers can consume significant amounts of water, impacting both the environment and operational costs.

The Hyperscale Dilemma

Hyperscale data centers, which house thousands of high-density servers, face amplified cooling challenges. These facilities have massive, concentrated heat loads that demand sophisticated thermal management. For the enterprises relying on them, continuous uptime is an absolute requirement with no room for error.

Achieving energy efficiency at such a massive scale is a daunting task. In facilities exceeding 10 MW, even small percentage gains in cooling efficiency translate into substantial savings. This often requires custom cooling configurations and advanced heat rejection strategies, pushing the industry away from traditional room-based cooling and toward more localized methods like liquid cooling.
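The sketch below puts numbers on that claim. It assumes a constant IT load and uses the PUE definition (facility energy = IT energy × PUE). The 10 MW load, the PUE improvement from 1.50 to 1.45, and the $80/MWh electricity price are hypothetical inputs, not figures from a real facility.

```python
HOURS_PER_YEAR = 8760

def annual_savings_mwh(it_load_mw: float, pue_before: float, pue_after: float) -> float:
    """Facility energy saved per year when PUE drops at a constant IT load.

    Facility power = IT power * PUE, so the saving is IT * (PUE_before - PUE_after).
    """
    if min(pue_before, pue_after) < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_load_mw * (pue_before - pue_after) * HOURS_PER_YEAR

# Hypothetical inputs: 10 MW IT load, PUE trimmed from 1.50 to 1.45,
# electricity at $80 per MWh.
saved_mwh = annual_savings_mwh(10.0, 1.50, 1.45)
saved_usd = saved_mwh * 80.0
print(f"{saved_mwh:,.0f} MWh/year, about ${saved_usd:,.0f}/year")
```

Under these assumptions, a 0.05 PUE improvement works out to roughly 4,380 MWh, or about $350,000, per year.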

A Comparative Guide to Data Center Cooling Technologies

Choosing the right cooling technology depends on your IT equipment density, local climate, budget, and sustainability goals. Let’s walk through the main options.

| Cooling Technology | Efficiency (PUE) | Cost (CapEx/OpEx) | Water Usage (WUE) | Ideal Use Case |
|---|---|---|---|---|
| Air Cooling | 1.4 – 1.5 (avg) | Moderate | Low to Moderate | Lower density, existing infrastructure |
| Liquid Cooling | 1.1 – 1.2 (avg) | High | Low to Zero | High-density, HPC, AI |
| Free Cooling | Varies (very low) | Moderate | Very Low to Zero | Cooler climates, hybrid systems |

Traditional Air Cooling

For decades, air cooling has been the standard. It uses Computer Room Air Conditioner (CRAC) or Computer Room Air Handler (CRAH) units to push cold air, often through a raised floor, to server racks. The core principle is hot/cold aisle containment. Racks are arranged so that cold air intakes face one aisle and hot exhausts face the other, which prevents mixing and improves efficiency. The challenge is that air is a poor thermal conductor and carries little heat per unit volume. As server densities increase, moving enough air to remove the heat becomes inefficient, creating hot spots and consuming more fan power. Traditional air-cooled data centers typically have a Power Usage Effectiveness (PUE) of 1.4 to 1.5. That makes air cooling a practical but limited choice for lower-density environments.

The Rise of Liquid Cooling

When air cooling isn’t enough, liquid cooling is the answer. Per unit volume, water can carry roughly 3,500 times as much heat as air for the same temperature rise, and it conducts heat more than 20 times better, so it cools high-power components far more efficiently. As processor TDPs climb past 280 watts, liquid cooling is becoming a necessity.
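The sketch below shows what that difference means in practice. It uses the sensible-heat relation Q = ρ·V̇·c_p·ΔT with rounded textbook property values near room temperature. The 30 kW rack load and the 10 K temperature rise are hypothetical inputs. The model ignores pumps, fans, and heat-exchanger losses.

```python
# Approximate fluid properties near room temperature (rounded textbook values).
AIR_DENSITY = 1.2        # kg/m^3
AIR_CP = 1005.0          # J/(kg*K)
WATER_DENSITY = 998.0    # kg/m^3
WATER_CP = 4186.0        # J/(kg*K)

def volumetric_flow_m3s(heat_w: float, density: float, cp: float, delta_t_k: float) -> float:
    """Volume flow needed to carry heat_w watts with a delta_t_k temperature rise.

    From Q = rho * V_dot * cp * dT, so V_dot = Q / (rho * cp * dT).
    """
    return heat_w / (density * cp * delta_t_k)

heat = 30_000.0  # hypothetical 30 kW rack
dt = 10.0        # hypothetical 10 K rise across the rack

air_flow = volumetric_flow_m3s(heat, AIR_DENSITY, AIR_CP, dt)
water_flow = volumetric_flow_m3s(heat, WATER_DENSITY, WATER_CP, dt)

print(f"air:   {air_flow:.2f} m^3/s")
print(f"water: {water_flow * 1000 * 60:.1f} L/min")
print(f"ratio: {air_flow / water_flow:,.0f}x more air by volume")
```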

- Direct-to-chip cooling uses cold plates mounted on CPUs and GPUs to absorb heat at the source. It has seen explosive growth, with some reports showing a 65% year-over-year increase in adoption.

- Immersion cooling submerges entire servers in a non-conductive dielectric fluid, which absorbs heat directly from all components. This can reduce a data center’s physical footprint and eliminate dust and corrosion issues.

- Rear door heat exchangers replace a rack’s rear door with a chilled-water coil, cooling hot exhaust air before it re-enters the room. This is an effective retrofit solution.

Liquid cooling systems can achieve a PUE of 1.1 to 1.2 and an Energy Saving Rate of 45-50%, a substantial improvement over air cooling. For high-density racks, HPC, or AI, it’s often the only viable option. A comprehensive review of liquid cooling explores this technology in greater depth.

Using Nature: Free Cooling and Economizers

Free cooling uses cool outdoor air or water to reduce or eliminate the need for mechanical refrigeration, offering energy savings between 10.2% and 13.1%.

- Air-side economizers bring filtered outside air directly into the data center when temperatures are low enough.

- Water-side economizers use cool outdoor temperatures to chill water in cooling towers or dry coolers, which then circulates through the facility.

- Adiabatic cooling passes outside air through a wet medium, where water evaporation cools the air before it enters a heat exchanger. These systems use up to 90% less water than traditional evaporative towers.

Free cooling is most effective in climates with cool temperatures, like those found in Western Pennsylvania or Ohio during certain seasons. Many modern facilities use a hybrid approach, maximizing free cooling hours while retaining mechanical systems for peak conditions. Installing this equipment requires experienced rigging and crane services to ensure your data center cooling infrastructure is placed safely and efficiently.
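The hybrid approach can be sketched as a mode selector driven by hourly outdoor temperature. The thresholds here are hypothetical. Real changeover points depend on the supply-air setpoint, equipment approach temperatures, and humidity, and this sketch ignores all three.

```python
from enum import Enum

class Mode(Enum):
    FREE = "free cooling"
    PARTIAL = "partial (economizer + mechanical)"
    MECHANICAL = "mechanical only"

# Hypothetical changeover thresholds in degrees C.
FREE_MAX_C = 18.0
PARTIAL_MAX_C = 24.0

def select_mode(outdoor_c: float) -> Mode:
    """Pick the cooling mode for one hour based only on outdoor dry-bulb temperature."""
    if outdoor_c <= FREE_MAX_C:
        return Mode.FREE
    if outdoor_c <= PARTIAL_MAX_C:
        return Mode.PARTIAL
    return Mode.MECHANICAL

def mode_hours(hourly_temps_c):
    """Count how many hours fall into each cooling mode."""
    counts = {mode: 0 for mode in Mode}
    for temp in hourly_temps_c:
        counts[select_mode(temp)] += 1
    return counts

# Hypothetical sample of six hourly readings.
sample = [5.0, 12.5, 18.0, 21.0, 26.0, 30.5]
for mode, hours in mode_hours(sample).items():
    print(f"{mode.value}: {hours} h")
```

If you run the counter over a full year of local weather data, you get a rough estimate of how many hours free cooling could cover.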

Key Strategies for Optimization

The best cooling technology is only as good as its implementation. Effective optimization doesn’t always require massive investment; it often involves practical, actionable steps that improve efficiency and lower costs.

Mastering Airflow Management

Effective airflow management is the bedrock of efficient data center cooling. The goal is to move the right air to the right places. The foundation is hot/cold aisle containment, which uses physical barriers to separate hot and cold air streams. This prevents mixing and allows your cooling units to work more efficiently.

To maximize containment, you must also:

- Install blanking panels in unused rack spaces to prevent cold air from bypassing IT equipment.

- Seal cable openings in raised floors and racks to stop cold air leaks and hot air recirculation.

- Maintain good rack hygiene by managing cables to avoid blocking airflow and ensuring equipment faces the correct direction.

Reducing this bypass airflow ensures every cubic foot of conditioned air does its job, directly improving cooling efficiency.
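Bypass airflow can be estimated with the standard-air sensible heat rule of thumb, Q (BTU/hr) ≈ 1.08 × CFM × ΔT (°F). The sketch below is a simplified model. It assumes sea-level air and counts any supply beyond what the servers draw as bypass. The room load, server ΔT, and supply airflow are hypothetical.

```python
BTU_PER_HR_PER_WATT = 3.412
SENSIBLE_HEAT_FACTOR = 1.08  # BTU/hr per CFM per deg F, standard sea-level air

def it_airflow_cfm(it_load_w: float, delta_t_f: float) -> float:
    """Airflow the IT equipment must pull to carry its heat at a given inlet-to-exhaust rise."""
    return it_load_w * BTU_PER_HR_PER_WATT / (SENSIBLE_HEAT_FACTOR * delta_t_f)

def bypass_fraction(supplied_cfm: float, it_cfm: float) -> float:
    """Share of supplied cold air that does not pass through IT equipment.

    Simplified model: supply beyond what the servers draw counts as bypass.
    If supply is below demand, servers recirculate hot air instead, so return 0.
    """
    if supplied_cfm <= 0:
        raise ValueError("supplied airflow must be positive")
    return max(0.0, (supplied_cfm - it_cfm) / supplied_cfm)

# Hypothetical room: 200 kW IT load, 20 deg F server delta-T, 45,000 CFM supplied.
demand = it_airflow_cfm(200_000, 20.0)
print(f"IT demand: {demand:,.0f} CFM")
print(f"bypass:    {bypass_fraction(45_000, demand):.0%}")
```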

Smart Design and Construction

Building efficiency into your facility from day one pays dividends for decades. Rack density planning should drive your cooling strategy, helping you determine whether to use air or liquid cooling. High-density racks (15-20 kW+) demand localized cooling solutions that are best included in the initial design.
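As a minimal planning sketch, the code below splits a rack inventory into air-cooled and high-density groups. It uses the low end of the 15-20 kW range above as the cutoff, which is an assumption. The `Rack` type, rack names, and loads are hypothetical.

```python
from dataclasses import dataclass

# Assumption: 15 kW/rack (low end of the 15-20 kW+ range) triggers a localized/liquid review.
HIGH_DENSITY_KW = 15.0

@dataclass
class Rack:
    name: str
    load_kw: float

def plan_cooling(racks):
    """Group racks by cooling approach and total the load in each group."""
    plan = {"air": [], "localized_or_liquid": []}
    for rack in racks:
        key = "localized_or_liquid" if rack.load_kw >= HIGH_DENSITY_KW else "air"
        plan[key].append(rack)
    totals = {key: sum(r.load_kw for r in group) for key, group in plan.items()}
    return plan, totals

racks = [Rack("A01", 6.0), Rack("A02", 8.5), Rack("B01", 18.0), Rack("B02", 32.0)]
plan, totals = plan_cooling(racks)
for key, group in plan.items():
    print(key, [r.name for r in group], f"{totals[key]:.1f} kW")
```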

Thermodynamic layouts consider the placement of CRAC units and aisle arrangements to optimize heat management, while modular designs allow you to add cooling capacity incrementally as your needs grow, future-proofing your investment.

Installing heavy and sensitive cooling units like chillers and CRACs requires specialized expertise. Our team at Sky Point Crane provides comprehensive support for building data centers, including specialized crane use for building data centers. We ensure critical infrastructure is placed safely and precisely, perfectly integrated into your facility’s design.

Operational Tweaks for Big Wins

Simple operational adjustments can deliver significant returns. For years, data centers were overcooled, wasting enormous amounts of energy. Modern IT hardware is designed to operate reliably across the ASHRAE recommended inlet temperature range of 64.4-80.6°F (18-27°C), so a setpoint of 70-75°F (21-24°C) sits comfortably inside it. Some studies show that firms waste money keeping temperatures well below this range.

By moving away from overcooling and adjusting setpoints to align with these guidelines, you can reduce energy consumption without compromising reliability. This isn’t about taking risks; it’s about operating within the manufacturer’s designed parameters.
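A monitoring script can flag overcooled and overheated inlets. This sketch uses ASHRAE's recommended inlet envelope of 18-27°C (64.4-80.6°F) for equipment classes A1-A4 as the default band. The band is a parameter, so you can pass a tighter operating target such as 21-24°C instead. Sensor names and readings are hypothetical.

```python
# ASHRAE recommended inlet envelope (dry bulb) for equipment classes A1-A4.
ASHRAE_LOW_C = 18.0
ASHRAE_HIGH_C = 27.0

def f_to_c(temp_f: float) -> float:
    """Convert Fahrenheit to Celsius."""
    return (temp_f - 32.0) * 5.0 / 9.0

def classify_inlets(readings_c, low_c=ASHRAE_LOW_C, high_c=ASHRAE_HIGH_C):
    """Label each inlet sensor as overcooled, ok, or too hot against the given band."""
    result = {}
    for sensor, temp_c in readings_c.items():
        if temp_c < low_c:
            result[sensor] = "overcooled"
        elif temp_c > high_c:
            result[sensor] = "too hot"
        else:
            result[sensor] = "ok"
    return result

# Hypothetical sensor names and readings (degrees C).
readings = {"rack-01": 16.5, "rack-02": f_to_c(72.0), "rack-03": 28.2}
print(classify_inlets(readings))
```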

Regular maintenance and monitoring are also crucial. Ensuring your cooling equipment is well-maintained helps prevent small inefficiencies from becoming big problems. This ongoing commitment to operational excellence is the foundation of truly efficient data center cooling.

The Future of Cooling: Innovations, AI, and Sustainability

The world of data center cooling is rapidly shifting from reactive to intelligent. We’re getting smarter and more efficient, and finding ways to turn waste into a valuable resource.

The Role of AI in Optimizing Data Center Cooling

Artificial intelligence can now cool data centers more effectively than manual methods. AI processes thousands of data points—temperatures, server workloads, weather forecasts—to make split-second decisions that maximize efficiency.

- Predictive analytics allow cooling systems to anticipate needs based on IT workload forecasts and weather, adjusting proactively instead of reactively.

- Real-time optimization continuously fine-tunes every chiller and fan, adjusting performance dynamically to maintain perfect conditions with minimal energy use.

- Automated control systems reduce human error by making autonomous decisions about setpoints and fan speeds. Research advocates for expanding AI use to automate energy efficiency analysis, as detailed in this research on AI in cooling optimization.
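Production systems use machine-learning models trained on many sensor streams. The core idea of predictive control can be sketched with a much simpler stand-in. Fit a straight-line trend to recent IT load, extrapolate one step ahead, and stage cooling capacity with a margin. This assumes cooling demand tracks IT load, since nearly all IT power ends up as heat. The 10% margin and the load history are hypothetical.

```python
def fit_line(ys):
    """Ordinary least-squares fit of y = a + b*x for x = 0, 1, ..., n-1."""
    n = len(ys)
    if n < 2:
        raise ValueError("need at least two points")
    xs = range(n)
    mean_x = (n - 1) / 2
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = mean_y - b * mean_x
    return a, b

def plan_cooling_kw(recent_it_kw, margin=0.10):
    """Forecast next hour's IT load from the linear trend, then add a safety margin."""
    a, b = fit_line(recent_it_kw)
    forecast = a + b * len(recent_it_kw)
    return forecast * (1 + margin)

# Hypothetical hourly IT load (kW) ramping up ahead of a batch job.
history = [400.0, 420.0, 440.0, 460.0]
print(f"{plan_cooling_kw(history):.0f} kW of cooling to stage")
```

The point is the proactive step. Capacity is staged for the forecast load before it arrives, rather than after inlet temperatures rise.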

Beyond Cooling: Heat Reuse and Recovery

What if the heat we remove isn’t waste, but a resource? Forward-thinking facilities are capturing this heat and putting it to work. Waste heat for district heating is already a reality, where servers warm surrounding homes and businesses. In cooler climates like Western Pennsylvania or Ohio, this makes tremendous sense. Other facilities use recovered heat to warm office spaces or pre-heat water.

The ultimate vision is a circular energy model where the data center is part of a larger energy ecosystem. This aligns with corporate sustainability goals and is highly efficient—heat recovery systems can achieve a Coefficient of Performance (COP) of 7 or higher, meaning for every unit of energy input, you get seven units of useful heating.
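The "seven units of heat per unit of input" figure follows from the definition of heating COP. This sketch assumes the recovery uses an electrically driven heat pump, which the text does not specify. The energy balance is: heat delivered = waste heat absorbed + electricity in. The 1,000 kW of captured heat is a hypothetical input.

```python
def heat_pump_output(waste_heat_kw: float, cop: float):
    """Return (electricity in, useful heat delivered) for a heat pump upgrading waste heat.

    Heating COP = heat delivered / electricity in, and the energy balance gives
    heat delivered = waste heat absorbed + electricity in, so
    electricity in = waste heat / (COP - 1).
    """
    if cop <= 1.0:
        raise ValueError("heating COP must exceed 1 for this balance")
    electricity_kw = waste_heat_kw / (cop - 1.0)
    delivered_kw = waste_heat_kw + electricity_kw
    return electricity_kw, delivered_kw

# Hypothetical: 1,000 kW of captured server heat, heat pump COP of 7.
power, heat = heat_pump_output(1000.0, 7.0)
print(f"electricity in: {power:.1f} kW, heat delivered: {heat:.1f} kW, COP: {heat / power:.1f}")
```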

Next-Generation Technologies

Innovation in data center cooling is accelerating to handle future heat loads.

- Two-phase cooling uses a fluid that boils as it absorbs heat and condenses to release it, an extremely efficient method for high-density components.

- Hybrid systems combine approaches—liquid cooling for hot racks, air cooling for less demanding areas, and free cooling when the climate allows.

- Geothermal and underground cooling tap into the earth’s stable, cool temperatures as a natural, low-energy heat sink.

- Advanced refrigerants with low Global Warming Potential (GWP) are replacing older, environmentally harmful chemicals to meet tightening regulations.

The future is about intelligent systems, waste heat as a resource, and technologies that work with the environment. These innovations are laying the groundwork for a more efficient and sustainable digital infrastructure.

Measuring Success: Key Performance Metrics

We can’t improve what we don’t measure. Key performance indicators (KPIs) are the vital signs of your data center, helping you track efficiency, catch problems, and demonstrate progress toward sustainability goals for your data center cooling systems.

Power Usage Effectiveness (PUE)

PUE is the industry standard for measuring data center energy efficiency. It tells you how much energy is used for overhead (cooling, lighting, etc.) beyond the IT equipment itself.

The calculation is: PUE = Total Facility Energy / IT Equipment Energy

A perfect PUE is 1.0. Traditional air-cooled data centers typically run between 1.4 and 1.5, while modern liquid cooling systems can achieve a PUE of 1.1 to 1.2. The global average PUE improved from 1.67 in 2019 to 1.55 in 2022. A lower PUE proves your optimization efforts are working and saving money.
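Computing PUE from metered energy is a single division. Two guards help catch metering mistakes. The overhead share, 1 - 1/PUE, is often easier to communicate: at a PUE of 1.5, one third of all facility energy is overhead. The meter readings below are hypothetical.

```python
def pue(total_facility_kwh: float, it_kwh: float) -> float:
    """PUE = total facility energy / IT equipment energy over the same metering period."""
    if it_kwh <= 0:
        raise ValueError("IT energy must be positive")
    value = total_facility_kwh / it_kwh
    if value < 1.0:
        raise ValueError("facility energy cannot be less than IT energy; check the meters")
    return value

def overhead_share(pue_value: float) -> float:
    """Fraction of total facility energy that does not reach the IT equipment."""
    return 1.0 - 1.0 / pue_value

# Hypothetical monthly meter readings.
p = pue(total_facility_kwh=1_450_000, it_kwh=1_000_000)
print(f"PUE {p:.2f}, overhead {overhead_share(p):.0%} of facility energy")
```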

Water Usage Effectiveness (WUE)

In many regions, water is as precious as electricity. WUE measures how much water your data center consumes for cooling relative to the energy your IT equipment uses.

The formula is: WUE = Annual Water Usage (Liters) / IT Equipment Energy (kWh)

Lower numbers are better. A WUE of 0 means no direct water consumption for cooling. This metric is critical in water-stressed regions, including parts of Western and Central Pennsylvania, Ohio, West Virginia, and Maryland. Traditional evaporative cooling towers have a high WUE, while free air cooling can achieve a WUE near zero. Adiabatic systems are also highly efficient, using up to 90% less water than traditional towers. Tracking WUE is becoming a business necessity.
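A minimal WUE calculation follows. The 1 MW load and the 15 million liters of annual water use are hypothetical. The adiabatic case does not model the system. It simply applies the "up to 90% less water" upper bound quoted above.

```python
def wue(annual_water_liters: float, it_kwh: float) -> float:
    """WUE in L/kWh: annual site water used for cooling divided by annual IT energy."""
    if it_kwh <= 0:
        raise ValueError("IT energy must be positive")
    return annual_water_liters / it_kwh

# Hypothetical site: 1 MW average IT load for a full year.
it_energy = 1_000.0 * 8760                           # kWh
tower_site = wue(15_000_000, it_energy)
adiabatic_site = wue(15_000_000 * 0.10, it_energy)   # assumes the 90% reduction upper bound
print(f"evaporative towers: {tower_site:.2f} L/kWh")
print(f"adiabatic:          {adiabatic_site:.2f} L/kWh")
```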

Other Important Metrics

PUE and WUE provide the big picture, but other metrics offer deeper insight:

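- Energy Reuse Effectiveness (ERE) credits the waste heat you capture and put to good use, such as for district heating: ERE = (Total Facility Energy - Reused Energy) / IT Equipment Energy. Like PUE, a lower ERE is better. It can even fall below 1.0 when enough heat is reused, which signals a more circular energy model.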

- Carbon Usage Effectiveness (CUE) connects your energy consumption to its environmental impact by measuring total carbon emissions relative to your IT energy use.
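Both metrics are simple ratios. This sketch follows The Green Grid's definitions:

- ERE = (total facility energy - reused energy) / IT energy.
- CUE = total CO2-equivalent emissions from facility energy / IT energy, which equals the grid emission factor × PUE.

The facility size, reuse share, and 0.4 kgCO2e/kWh grid factor are hypothetical.

```python
def ere(total_facility_kwh: float, reused_kwh: float, it_kwh: float) -> float:
    """ERE = (total facility energy - reused energy) / IT energy. Lower is better."""
    if it_kwh <= 0:
        raise ValueError("IT energy must be positive")
    return (total_facility_kwh - reused_kwh) / it_kwh

def cue(total_facility_kwh: float, it_kwh: float, kg_co2e_per_kwh: float) -> float:
    """CUE in kgCO2e per kWh of IT energy; equals emission factor * PUE."""
    if it_kwh <= 0:
        raise ValueError("IT energy must be positive")
    return total_facility_kwh * kg_co2e_per_kwh / it_kwh

# Hypothetical year: a PUE 1.3 facility exporting heat equal to 20% of its IT energy,
# on a grid emitting 0.4 kgCO2e per kWh.
it = 10_000_000.0
total = 13_000_000.0
print(f"ERE {ere(total, 2_000_000.0, it):.2f}")
print(f"CUE {cue(total, it, 0.4):.2f} kgCO2e/kWh")
```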

By tracking these metrics, you get a complete picture of your data center’s performance, helping you make decisions that benefit both your bottom line and the environment.

Conclusion: Building a Sustainable and Efficient Future

We’ve seen that when it comes to data center cooling, the old ways won’t work for tomorrow’s demands. With data centers projected to consume up to 13% of global electricity by 2030 and processor heat loads soaring, the industry is at an inflection point. The shift to liquid cooling (PUE 1.1-1.2) for high-density loads is becoming essential. Smart design and operation, including hot/cold aisle containment and optimized temperature setpoints (70-75°F), cut cooling energy without compromising reliability, while free cooling can save 10-13% of energy where the climate allows. And AI is changing cooling from a reactive process into a predictive, self-optimizing system.

Looking ahead, sustainability is the primary driver of innovation. Heat reuse is turning waste into a resource, and advanced refrigerants are protecting our climate. The data center of tomorrow will be a circular energy system, contributing to its community instead of just consuming resources.

Here’s the reality: building or upgrading a data center to incorporate these advanced cooling systems is complex work. Massive chillers, CRAC units, and liquid cooling components are heavy, sensitive, and require exact placement. A single miscalculation during installation can compromise years of careful planning.

That’s where our expertise comes in. At Sky Point Crane, we’ve spent over 30 years handling critical infrastructure across Western and Central Pennsylvania, Ohio, West Virginia, and Maryland. We understand that installing data center cooling equipment isn’t just about lifting heavy objects—it’s about precision, safety, and ensuring every component is positioned for optimal performance.

Our NCCCO-certified operators and comprehensive 3D Lift Planning services mean your cooling infrastructure gets installed right the first time, minimizing downtime and maximizing efficiency from day one. We’re not just moving equipment; we’re helping you build the foundation for a sustainable, efficient future.

Ready to take the next step? Plan your next data center project with our expert crane services and let’s build something remarkable together.

We’re here to support every aspect of your project with specialized services designed for complex industrial work. Our 3D Lift Planning ensures precision and safety before any equipment leaves the ground. Our rigging signal persons provide seamless coordination for even the most intricate lifts. And when you need secure storage for your equipment, our industrial storage solutions—both indoor and outdoor—keep everything protected and ready when you need it.

We serve diverse markets and industries with an unwavering commitment to safety, health, and environmental responsibility. Our equipment capabilities are matched only by our dedication to getting your project done right.

The future of data centers is efficient, sustainable, and remarkably cool. Let’s build it together. Explore our full range of crane services and discover how Sky Point Crane can make your next project a success.